2015

Blog: Make your own Parkrun barcode [Sunday 18th October 2015]

Parkrun, a popular running community, uses

barcodes to identify runners. Runners can print their own code (many laminate it, so it survives the rigors of the race and the elements) or

buy various tokens like keyfobs or wristbands or a running shirt with the barcode printed on it. One less thing to carry, but one more bill to pay.

Or you can create our own barcode, and maybe mess around with it. The Parkrun runner barcode is just the runner's unique ID number, encoded as a Code 128 (code set B) barcode - as far as I know the format is a single letter and 6 or 7 digits.

There's plenty of software that can print barcodes - for example, you can use GNU barcode to generate one:

barcode -b A9876007 -e128b -o barcode.ps

Just for fun, you can write your name on top of the barcode - as long as you leave enough space unmolested for the barcode reader to scan. This script uses

Imagemagick to do that:

#!/bin/bash

barcode -b A9876007 -e 128b | \

convert -density 300 \

- \

-flatten \

-crop 630x360+0+2920 \

-font Ubuntu-Bold \

-fill DarkRed \

-stroke white \

-strokewidth 4 \

-pointsize 21 \

-annotate +14+3010 'Parkrun Paul' \

\

out.png

which produces a barcode like this

You can print that out (on an A4 printer), to the same scale as the official Parkrun barcodes generated by their website, with this command:

lpr -o scaling=14 out.png

2014

Blog: PyCrypto's PBKDF2 with an alternate HMAC algorithm [Monday 5th May 2014]

The documentation for PyCrypto's

implementation of the

PBKDF2 (Password-Based Key Derivation Function 2) scheme isn't super clear

about how to use an alternate hash function for the pseudorandom function. Here's a little example I've cooked up.

One needs to wrap PyCrypto's own HMAC with a little function that handles the password and salt given by PBKDF2 and which emits the digest of the resulting hash. This example illustrates using the

basic hash functions available from PyCrypto itself (several of which aren't suitable for practical use, and are just included here as examples):

#!/usr/bin/python3

from binascii import hexlify

import Crypto.Protocol.KDF as KDF

from Crypto.Hash import HMAC, SHA, SHA224, SHA256, SHA384, SHA512, MD2, MD4, MD5

import Crypto.Random

password = "A long!! pa66word with LOTs of _entropy_?"

for h in [SHA, SHA224, SHA256, SHA384, SHA512, MD2, MD4, MD5]:

hashed_salted_password = KDF.PBKDF2(password,

Crypto.Random.new().read(32), # salt

count=6000,

dkLen=32,

prf=lambda password, salt: HMAC.new(password, salt, h).digest())

print ("{:20s}: {:s}".format(h.__name__,

hexlify(hashed_salted_password).decode('ascii')))

Blog: Did Boris Yeltsin inspire Game of Thrones? [Sunday 20th April 2014]

In George R. R. Martin's fantasy series

A Song of Ice and Fire, the kings of the unfortunate land of Westros (not Nova Scotia) sit on a throne made from hundreds of swords, which is supposed to look like

this. The swords are those

of an ancient king's enemies, and the uncomfortable chair they comprise serves to remind the incumbent monarch of the precarious, painful nature of lordship. It's a neat analogy; but did Martin invent it, or was it (of all unlikely fantasy authors) Boris Yeltsin?

In a speech on TV in 1993, three years before A Game of Thrones (the series' first book) was published, Yeltin said "You can build a throne with bayonets, but it's difficult to sit on it" (reference). The normally pretty thorough TV

tropes doesn't have like it that predates either (trope: Throne made of X) - obviously both Yeltin's and Martin's thrones owe a lot to the Sword of Damocles.

To Martin, of course, it's a fun fantasy in a magical land of boobies and dragons. To Yeltsin thrones were no abstract plaything - for him, as for Henry VI, uneasy lies the head that wears a crown. Perhaps uneasy sits the bum underneath it too.

Blog: Why you want a driverless car: not to drive you around [Thursday 17th April 2014]

Driverless cars are coming, sometime and maybe. Some of us, at least some of the time, still enjoy driving. But even if you never let the car drive itself when you're in it, even if you always drive to pick people up and drop them off, there are plenty of reasons why you might

let the car drive itself when you're not in it. The additional capabilities this gives the car may change the way we use cars at least as much as when we're in them.

A lot of the driving you do in your car is ancillary to owning a car, rather than being to service the things you want. If the car can drive itself around, some of that goes away. And there's some routine stuff it can do too. Consider these possibilities:

- You drive to the city centre to go shopping. But you don't drive around looking for parking, you just drive to where you want to go, get out, and say "go find a parking space" and the car obeys. When you need it back, you can summon it with your phone.

- The car is due a service. You just go to work as usual, but when you get there you just get out at the office and send the car off to the dealer. Once it's done, the car comes back and parks in the office lot.

- Your daughter phones you from school. She's forgotten her sports clothes, which she needs for third period. You find her sports bag and put it in the trunk of the car. You send it off to her school. When it gets there it parks in the school lot and messages her; at the end of the next period

she comes out and gets the bag from the car (which unlocks only for her).

- You paid for that car that's sitting idle in your garage right now. If you know you won't need it for the rest of the day, if it's self-driving you can rent it out via a car-share site for a few hours to someone else. It'll deliver itself to them when they've booked it, and when they're done

it'll return and park back at your house.

- The car is electric, but your office (mall, cinema, apartment complex) doesn't have enough inductive chargers for every electric car. You turn over (limited) control to the building's valet-manager system, which shuffles all the cars around over their stay, making sure each gets enough charge

to get the driver home.

This last part is maybe the best part. Why does your office, or your apartment building, even have parking next to it? With self-driving cars, it doesn't need to - it can contract parking from some parking lot blocks, even miles away. And valet parking (which is what happens when all the cars in

the lot are self-driving, and are controlled by the lot's valet manage system) can manage where every car is kept. With cars parked millimetres apart (no need to open the doors) bumper to bumper, it can manage double or triple the density of a normal lot. You tell it when when you'll need it, and

the system can make sure the car is shuffled around when it's time. If you have a genuine emergency (which means you can't wait five minutes for an emergency shuffle to occur) the lot will send a taxi.

With self-driving cars, particularly electric ones, the future may spell bad news for parking attendants, taxi drivers, and filling station cashiers.

Adapted from my reddit post of yesterday.

Blog: The pointless Pono [Monday 17th March 2014]

Neil Young (yes, him) is backing a Kickstarter for a new high-definition portable media player,

Pono. It's daft.

Pono promises high quality, high definition digital portable music. CD-DA isn't a perfect format, and munged through mp3 codecs it's a bit worse, with data compression, loudness, range compression, and simply there (usually) being only two channels. In an ideal listening environment, with decent

speakers and a quiet room, this might possibly matter. But in a portable environment it just doesn't.

The track record of better-than-CD-quality digital audio isn't a good one anyway. HDCD, SACD, and DVD-A never made much of a dent. In part people just don't care enough (perhaps they're ignorant of the wonders they're missing, perhaps not), and in part copyright holders are reluctant to put such

a high-quality stream in their customers' hands (knowing how readily media DRM schemes have been cracked). Perhaps it's time for another crack at getting a high quality digital music standard off the ground. Perhaps iTunes and SoundCloud and Facebook have altered the music marketplace enough that

there's room to market better product right to the consumer. A coalition of musicians, technologists, and business people maybe can establish a new format, one that can be adopted across a range of digital devices and can displace mp3 (which is, I'll happily admit, rather long in the tooth).

But portable media is a dumb space in which to do it. And building your own media player is dumberer. People listen to portable players when they're in entirely sub-optimal listening environments. They're on the train, in the back of the car, they're in the gym or they're walking or their

running down the road. There's traffic noise, wind and rain, other people's noises, and the hundred squeaks and groans of the city. And they're listening on earbuds or running headphones or flimsy Beats headphones. The human auditory system is great at picking out one sound source from the miasma,

but it can only do so for comprehension not for quality too. High quality audio in all these enviroments is wasted - it's lost in the noise.

When I started running, I had a hand-me-down Diamond Rio PMP300. It came with only 32MB of internal storage - that's barely enough for a single album encoded at a modest compression level. So I broke out Sound eXchange and reduced my music to the poorest quality I could manage, and eventually reduced it to mono too. With that done, I could get a half-dozen or so albums on the player, enough that I could get enough variety that each run didn't just follow the

same soundtrack. With skinny running headphones, the wind and the rain, the traffic noise, and the sound of my own puffing and panting, it just didn't matter that the technical qualities of the sound were poor. And now, a decade later, when I have a decent phone that plays high quality mp3s, it

doesn't really sound any different in practice.

So even if people buy Ponos, even if media executives decide to sell them high-definition digitial audio, if they pay (again) for all the music, in the environments most people will be using their Pono, the difference will be, in practice, inaudible.

2013

Blog: The zombie invasion of Glasgow [Friday 25th October 2013]

The introductory zombie scene in the film

World War Z, while set in Philadelphia, was filmed in central Glasgow, around the City Chambers and George Square. I know that part of Glasgow pretty well, but they really did an excellent job of making it look American and

disguising its weegieness. I've pored over the entire scene, and here (mostly shot by shot) is a breakdown of where the shot was taken, with Google Maps links.

For each entry I show the time (into the UK DVD edition of the film, in minutes:seconds), the line of dialog roughly about that time, a description of the streets shown, and a Google Maps link (you might need to rotate the camera in a few of them):

- 04:56 20 questions - Junction of Cochrane St. and Montrose St., looking west up Cochrane St.

(map)

- 05:35 "what is going on?" - Junction of Cochrane St. and John St., looking west up Cochrane St. Emporio Armani visible on the left.

(map)

- 06:26 "you all right?" - Cochrane St., west of Montrose St., looking east down Cochrane St. The Bridal Studio visible in the distance.

(map)

- 07:14 "I want my blanket" - Junction of Cochrane St and South Frederick Street. Statue of scientist Thomas Graham with The Piper behind it.

(map)

- 08:17 "out of the way" - George Street, between North Frederick Street and John Street, looking east down George Street. A sign for US30 over the Ben Franklin Bridge in Philadelphia is present. In

reality we're about 100m north of the garbage truck crash.

(map)

- 08:34 "ahh!" - Helicopter shot over Glasgow City Chambers' central tower looking west over George Square. The camera pans clockwise until we're looking roughly at #5

(map)

- 08:41 "aargh" George Square at North Hannover Street looking east down George Street. The white base of the statue of Gladstone is visible right of frame.

(map)

- 08:44 faces of running people - As #5, but looking west back into George Square. The Tron Church is replaced by a glass skyscraper.

(map)

- 09:22 "seven" - George Square (West George Street) at North Hannover Street, looking westward down George Street. Now the Tron Church is present.

(map)

- 09:49 starting the RV - High shot from #5, showing the north facade of the City Chambers

- 10:05 more running - I'm confused about this one - it may be a computer collage

- 10:06 racing toward bridge - Again as #5, looking east down George Street. But the blue metalwork of the Ben Franklin Bridge is visible

- 10:08 roadblock - On the bridge: woosh, we're on the bridge on the Camden NJ shore, looking west into the Philly.

- 10:13 zombies teeming through George Square - Another helicoper shot, this time over Queen Street looking eastward at the front of the City Chambers. The crashed garbage truck is in the right place.

(map)

- 10:17 helicopter - view from the back of a helicopter, of Philly's Liberty Place

Blog: Changing the Gnome/Unity background in Python [Friday 4th October 2013]

It's easy to change the desktop background from Python, without using an external program.

Here's a basic example for Gnome (including Unity) on Linux:

#!/usr/bin/env python3

from gi.repository import Gio

bg_settings = Gio.Settings.new("org.gnome.desktop.background")

bg_settings.set_string("picture-uri", "file:///tmp/n2.jpg") # you need the full path

# you can also change how the image displays

bg_settings.set_string("picture-options", "centered") # one of: none, spanned, stretched, wallpaper, centered, scaled

You'd think that it would be just as straightforward to do this on KDE4; it was possible with pydcop on KDE3, but I can't find a way of doing on KDE4 with dbus. I've seen some postings which suggest that they're almost at the point of adding support for this.

Blog: File magic for the constituent parts of Linux RAIDs [Saturday 15th June 2013]

Curiously, the

magic(5) for

file(1) on my machine doesn't recognise the format of superblocks which make up a Linux RAID, instead simply reporting them as "data".

This Python2 program dissects the header of a given block and shows some information about it and the RAID volume of which it is a constituent.

#!/usr/bin/python

"""

Linux RAID superblock format detailed at:

https://raid.wiki.kernel.org/index.php/RAID_superblock_formats

"""

import binascii, struct, sys, datetime, os

def check_raid_superblock(f, offset=0):

f.seek(offset) # start of superblock

data = f.read(256) # read superblock

(magic,

major_version,

feature_map,

pad,

set_uuid,

set_name,

ctime,

level,

layout,

size,

chunksize,

raid_disks,

bitmap_offset # note: signed little-endian integer "i" not "I"

) = struct.unpack_from("<4I16s32sQ2IQ2Ii",

data,

0)

print "\n\n-----------------------------"

print "at offset %d magic 0x%08x" % (offset, magic)

if magic != 0xa92b4efc:

print " <unknown>"

return

print " major_version: ", hex(major_version)

print " feature_map: ", hex(feature_map)

print " UUID: ", binascii.hexlify(set_uuid)

print " set_name: ", set_name

ctime_secs = ctime & 0xFFFFFFFFFF # we only care about the seconds, so mask off the microseconds

print " ctime: ", datetime.datetime.fromtimestamp(ctime_secs)

level_names = {

-4: "Multi-Path",

-1: "Linear",

0: "RAID-0 (Striped)",

1: "RAID-1 (Mirrored)",

4: "RAID-4 (Striped with Dedicated Block-Level Parity)",

5: "RAID-5 (Striped with Distributed Parity)",

6: "RAID-6 (Striped with Dual Parity)",

0xa: "RAID-10 (Mirror of stripes)"

}

if level in level_names:

print " level: ", level_names[level]

else:

print " level: ", level, "(unknown)"

layout_names = {

0: "left asymmetric",

1: "right asymmetric",

2: "left symmetric (default)",

3: "right symmetric",

0x01020100: "raid-10 offset2"

}

if layout in layout_names:

print " layout: ", layout_names[layout]

else:

print " layout: ", layout, "(unknown)"

print " used size: ", size/2, "kbytes"

print " chunksize: ", chunksize/2, "kbytes"

print " raid_disks: ", raid_disks

print " bitmap_offset: ", bitmap_offset

if __name__ == "__main__":

if os.geteuid()!=0:

print "warning: you might want to run this as root"

if len(sys.argv) != 2:

print "usage: %s path_to_device" % sys.argv[0]

sys.exit(1)

filehandle = open(sys.argv[1], 'r')

check_raid_superblock(filehandle, 0x0) # at the beginning

check_raid_superblock(filehandle, 0x1000) # 4kbytes from the beginning

and here is additional

magic(5) data to recognise RAID volumes. It's incomplete - I've only been able to test it with RAID volumes I've been able to create myself.

# Linux raid superblocks detailed at:

# https://raid.wiki.kernel.org/index.php/RAID_superblock_formats

0x0 lelong 0xa92b4efc RAID superblock (1.0)

>0x48 lelong 0x0 RAID-0 (Striped)

>0x48 lelong 0x1 RAID-1 (Mirrored)

>0x48 lelong 0x4 RAID-4 (Striped with Dedicated Block-Level Parity)

>0x48 lelong 0x5 RAID-5 (Striped with Distributed Parity)

>0x48 lelong 0x6 RAID-6 (Striped with Dual Parity)

>0x48 lelong 0xffffffff Linear

>0x48 lelong 0xfcffffff Multi-path

>0x48 lelong 0xa RAID-10 (Mirror of stripes)

0x1000 lelong 0xa92b4efc RAID superblock (1.1)

>0x1048 lelong 0x0 RAID-0 (Striped)

>0x1048 lelong 0x1 RAID-1 (Mirrored)

>0x1048 lelong 0x4 RAID-4 (Striped with Dedicated Block-Level Parity)

>0x1048 lelong 0x5 RAID-5 (Striped with Distributed Parity)

>0x1048 lelong 0x6 RAID-6 (Striped with Dual Parity)

>0x1048 lelong 0xffffffff Linear

>0x1048 lelong 0xfcffffff Multi-path

>0x1048 lelong 0xa RAID-10 (Mirror of stripes)

Blog: A complete logical system based on material implication [Friday 26th April 2013]

A few days ago I watched R. Stanley Williams' presentation

Finding the Missing Memristor, which talks about building digital systems from

memristor, a novel basic

electronic component. Near the end, Williams talks about a memristor lending itself to easily building a

material implication logic element (at

38 minutes into the

presentation). He mentions, but doesn't go into detail, that with only this primitive, and a predefined "False", one can build the full set of

basic logic elements.

I'd long been familiar with the idea that one could do so from NAND gates (building a complete NAND logic) and similarly from NOR

gates (producing a complete NOR logic). Indeed, every current digital electronic system is build from one of these two schemes. But I didn't know about the completeness of implies-logic, and I'll confess to being a bit intimidated by

Principia Mathematica. So I figured I'd work through building the system myself. Here goes.

We start with the material implication operator →, which has the following truth table:

More of interest to logicians than electronic engineers, note that x → x is always True, for either value of x. So, logically speaking, it's fair to say that material implication gives rise to True (that having True a priori isn't necessary); the same isn't true for False. Someone

building an actual digital circuit with memristors isn't really going to care, because a logical True and False (presumably a high and low voltage feed) are always readily available anyway. I don't know enough about the actual implementation of a memristor → gate to know whether, if you just

tied the two input lines together (and not to an input line from elsewhere in the circuit), you'd actually get a consistent True level out of it (but I'm guessing you wouldn't).

With that, we can build an OR gate (denoted as ∨)

p ∨ q is (p → q) → q

And negation, an inverter gate (denoted as ¬):

¬p is p → F

With not and negation we can build a logical NOR (denoted with Peirce's arrow ↓)

p ↓ q is ¬(p ∨ q)

so

p ↓ q is ((p → q) → q) → F

...and from that point we could follow the pattern of NOR logic and build the rest of the system. Just for completeness, we can build and (∧) and nand (↑)

p ∧ q is (¬p) → (¬q)

p ↑ q is ¬(p ∧ q)

Finally we can construct xnor (logical equality, denoted =) and exclusive or (xor, denoted ⊕)

p = q is (p ∧ q) ∨ (p ↓ q)

and

p ⊕ q is ¬=

Blog: Cyberwar on /North Korea/? [Saturday 20th April 2013]

Apparently Anonymous, that vaguely extant hacktivist group that lazy journalists like to write about when they're Googling for news rather than going out and doing their actual job, has threatened to declare "cyberwar" on the fancifully named Democratic People's Republic of Korea [link].

Cyberwar on North Korea? That seems as likely to be effective as declaring cyberwar on House Lannister.

Blog: To cure you they must kill you [Wednesday 3rd April 2013]

I spent a little time fixing someone's Windows system. Because, you know, Windows is easy to use. It didn't have a virus; it just had an inexplicable amount of anti-virus. It seems that the anti-virus program's chief strategy to fight virus infection is to just fill up all the

memory and use all the CPU cycles itself, so there's no headroom left for the viruses to get in. It's a bit like trying to cure yourself of a blood disease by injecting your veins with bath sealant.

Blog: Is Valve's destiny THREE? [Thursday 10th January 2013]

Here's a weird little pet theory for you. Consider our friends over at Valve Software, makers of the excellent

Half Life 2,

Portal 2,

Left4Dead 2,

Dota 2, and

Team Fortress 2. That's a whole lot of 2s. Of these,

Half Life 2 is the

oldest, and the next chapter of the series the most painfully overdue. So where do all these unrelated games go from here? Well, to

THREE, obviously.

Given Valve's fondness for being silent until they're sure they're in a good position to deliver, it's easy to go from casual imputation to wild fantasy. So, without any evidence at all, here's my wild guess about the future of each of Valve's core game titles:

- All these titles exist in the same fictional universe ("Valviverse?"). Half Life and Portal are already (slightly) interrelated, and it's not hard to figure out how to incorporate the anarchy of Left4Dead's Green Flu

zombie pandemic into Half Life's Seven Hour War, as perhaps a Combine bio-weapon meant to soften up resistance and thin out the human population before and after their primary military operation. I'll admit that it's harder to work

the cartoonish mayhem of Team Fortress and the fantastical lunacy of Dota, as they're not really very compatible with the gritty world of Half Life. The usual scriptwriter follies are still available - alternate dimensions, distant past or future, VR simulation, and (my

favourite) "you broke reality with your damn manchine".

- So let's call all these games THREE - but I'm not suggesting that Value consolidate these different franchises (each a distinct genre, with different fanbases) into a single game. They obviously need each individual game to remain a distinct product, but tying them together into a single

milieu makes each work for the other.

- The story unfolds through each episode, and through subsequent DLC for each.

Why would Valve do this, when they're already swimming in money, and when each franchise is individually healthy (if we consider Half Life's lengthy state of hybernative naptosis to be healthy? Because Valve need to sell as many of their forthcoming Steam Box PC/console/TV hybrid thing as possible. And rather than simply boast a bunch of sequels as launch content, weaving the whole thing into a major event can

only enhance the press coverage and gamer hunger for the thing.

If they do this, Valve's track record suggests they'll tease it somehow, perhaps by an ARG, or by some subtle under-the-wire DLC. With everything already updated on Steam for technical reasons, it'd be easy for them to alter some

noticeboards or leave the odd corpse from one game in another.

Now, that's thinking with portals.

2012

Blog: Aliens vs. Thanksgiving [Tuesday 20th November 2012]

It's at this time of year that millions of college-age Americans return to the family home and to their childhood place in the family structure. Some can decide whether to sit at the kids' table or the adults'. If they choose the adult table, they'll hear an hour-long lecture about how Barack

Obama is an evil alien who is out to destroy America; if they sit at the kid table, they'll hear an hour long lecture about how Megatron is an evil alien who is out to destroy America.

Does this mean that Barack Obama is really Megatron?

Blog: Great Scottish Run 2012 [Tuesday 4th September 2012]

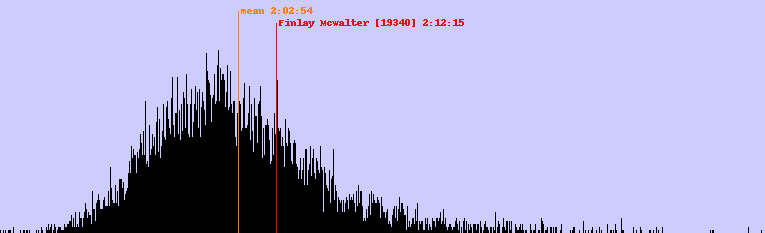

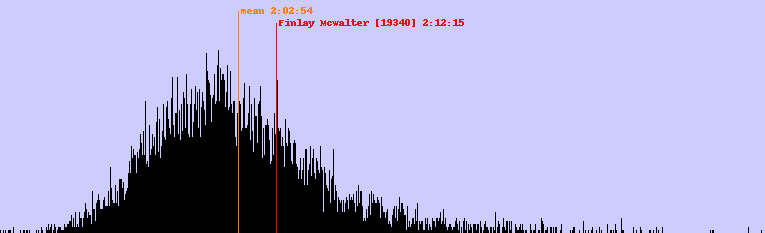

As with last year, I did the Great Scottish Run (a half marathon around Glasgow) on Sunday. This time I did it in 2:12:15, three minutes slower than last year.

Although it was a little cooler than last year, and I was better hydrated and probably better prepared, I still ran a bit slower - I really don't know why. Running past someone on Ballater Street who was being treated by paramedics, and was clearly having a serious problem, surely made me decide to

slow down a bit. Plus being a year older and 3kg heavier than last year didn't help.

This year the winning time was 1:03:14, with the mean finishing time 2:02:54 and the most popular finishing time 1:57:44. The graph showing the distribution of runners (new improved with labels) is very much like last year's distribution:

The Python2 code to generate the graph is below. It'll probably need to be tweaked for subsequent years, as they're not very consistent about the CSV data dump.

#!/usr/bin/env python2

# config parameters

# a dictionary giving the runner number and the colour we're going to draw their line as - their

# name is extracted from the CSV ffile.

runners_to_show = { '19340': {"colour": 'red'},

}

RESOLUTION=15 # how many seconds correspond with each horizontal pixel

VSCALE=3 # vertical multiplier

# ##############################################################

import csv, sys, Image, ImageDraw

# overall stats

mintime = 10000

maxtime = 0

count = 0

totaltime = 0

# gender totals

boycount = 0

boytotal = 0

girlcount = 0

girltotal = 0

# the census has one bucket for each "slice"

census = [0]*(RESOLUTION*1000)

most_popular_time = 0

most_popular_count = 0

def parsetime(t):

"convert an h:m:s time into a number of seconds"

h,m,s = t.split(':')

return int(h)*3600 + int(m)*60 + int(s)

def unparsetime(t):

"convert a number of seconds into a hh:mm:ss string"

t = int(t)

hours = t/(60*60)

t -= (hours*60*60)

mins = t/60

t -=(mins*60)

return '%d:%02d:%02d' % (hours,mins,t)

try:

infile = open ('2012GSRHalfMarathon.csv','r')

except IOError:

print "error opening input file"

sys.exit(1)

reader = csv.reader(infile)

for row in reader:

place, number, time, forename, surname, gender, age, club, split1, split2, split3, filler = row

# do some stats

sectime = parsetime(time) # time in seconds

if sectime < mintime: mintime = sectime

if sectime > maxtime: maxtime = sectime

count += 1

totaltime += sectime

if gender == 'M':

boycount += 1

boytotal += sectime

else:

girlcount += 1

girltotal += sectime

# is this row the entry for a runner we're particularly interested in

for k in runners_to_show:

if k == number:

runners_to_show[k]['name'] = forename+" "+surname

runners_to_show[k]['time'] = time

runners_to_show[k]['sectime'] = sectime

print 'found runner', k, runners_to_show[k]

# keep a census for each possible finishing time

index=sectime/RESOLUTION

census[index] += 1

if census[index]> most_popular_count:

most_popular_count = census[index]

most_popular_time = sectime

# show the results of our pass through the data

print 'mintime', mintime, unparsetime(mintime)

print 'maxtime', maxtime, unparsetime(maxtime)

meantime = totaltime/float(count)

print 'meantime', meantime, unparsetime(meantime)

print 'most popular finishing time: %s (%d people)' % (unparsetime(most_popular_time),

most_popular_count)

# render an image, with a histogram for the census

minbucket = mintime/RESOLUTION # the leftmost bucket

image_width = (maxtime-mintime)/RESOLUTION

image_height = (most_popular_count*VSCALE) + 50 # 50 is padding at the top

im = Image.new('RGB', (image_width, image_height), '#ccf')

draw = ImageDraw.Draw(im)

# draw the overall histogram

for x in xrange(image_width):

draw.line([(x,image_height),

(x,image_height- (VSCALE*census[x+minbucket]))],

"black")

texttop = 5

# draw mean line

x = (meantime-mintime)/RESOLUTION

draw.line([(x,image_height),

(x,11)],

'#FF7F00')

draw.text((x+3,texttop),

"mean " + unparsetime(meantime),

'#FF7F00')

texttop += 12

# draw each of the specified runners' times

for racenum,data in runners_to_show.iteritems():

x = (data['sectime']-mintime)/RESOLUTION

draw.line([(x, image_height),

(x, texttop+6)],

data['colour'])

draw.text((x+3,texttop), "%s [%s] %s" %(data['name'],

racenum,

data['time']),

data['colour'])

texttop += 12

del draw

im.save('graph2.png')

edit: I later discovered that the runner who was being treated, Aubrey Smith, died. There's a weird fraternity between runners, and when one of us falls we all hurt.

Blog: Under Google's bonnet [Tuesday 8th May 2012]

I've just noticed that in Google Chrome's settings screen, the section with all the technical stuff is called "Under the Bonnet" in the en-uk version, and "Under the Hood" in the en-us. It's a little thing, and in itself it doesn't really matter (I doubt many UK users wouldn't

know what "under the hood" means), but it does show that someone is paying attention to the details, which has a positive halo effect for one's perception of the rest of the product.

Blog: ipod nano replacement [Saturday 5th May 2012]

Back when it first came out in 2005, I bought one of the first generation of Apple iPod nanos. It's been a few years since I retired it, replacing it with an iPod Touch and then an Android phone - but I'm pretty good about storing old things like this properly, with all the

necessary equipment and in good order. It seems Apple are worried these old things pose a fire risk, so they're replacing them.

Apple's page about the replacement program calls it a "safety risk" without providing much detail; Wikipedia's article mentions a few overheating events (but really not

a lot). Still, Apple are clearly worried about their liabilities. So they took back the old one (a black 2GB model) and they've sent a new 8GB silver one.

It's slightly strange to get an Apple product like this. There's none of the usual Apple "unboxing" experience, because there's no box at all. The Nano came in a generic shipping box (one downright Brobdingnagian when compared with the tiny size of the player). No manual, no cable, no software,

just the tiny Nano with a serial number sticker.

I'm surprisingly sentimental about technology. I still have every mobile phone I've ever owned (though I've given one to a relative) and, until this, every mp3 player too, right back to a Diamond Rio PMP300. If it hadn't been for the fire

risk, which really prevents me from lending the old thing to someone else, I'd probably have kept it.

Still, the new Nano is an impressive little thing. It weighs very little (it's almost light enough that one could keep it only on the cable), the built-in clip is a nice idea, and the touchscreen works nicely. The build-in pedometer doesn't compare very well with a decent Oregon Scientific one,

however - it seems to be rather arbitrary and unresponsive.

2011

Blog: In praise of the Shire calendar [Tuesday 20th December 2011]

It's almost the festive season, and following that we'll be changing the calendars to another year. The current Gregorian calendar that we use is a mad palimpsest of the last two thousand years of calendar craziness. In combination with the incomfortable facts about the

Earth's orbit (which don't lend themselves to any neat symetrical solution), we're left with 14 different calendar configurations and an odd hodge-podge of months.

These Johns

Hopkins researchers propose a simpler system, but it has a "leap week" ever so often - so it's hardly a rational improvement. But this problem was solved decades ago, by the unlikeliest of inventors.

The most elegant solution to the calendar I've seen is JRR Tolkien's (yes, him) Shire Calendar:

- It's fully conformant with the astronomical realities (no magical even-divisions or date fudging necessary).

- There are still 12 months (so no weird decimal months, no 34th of Thermidor bollocks). You can stick with the familiar month names (rather than Tolkien's Hobbity ones).

- Each month is 30 days long (simplifying accounting, pay calculations, holiday accrual etc.). No pointless variation, no mnemonics.

- Year on year, a given month always begins with the same day of the week. Even for leap years. So if you were born on a Tuesday, your birthday will always be Tuesday.

- The clever part (which allows all the other stuff to happen) is there is a winter festival holiday (2 days) and a summer festival holiday (3 days normally, 4 in leap years). These aren't week days and aren't in a month - they're special. So e.g. Christmas doesn't change between sometimes being

in the weekend, or adjacent to the weekend, or midweek - Christmas is always in the same place. I know I always get disoriented around Christmas - Christmas already seems like a special day which doesn't resemble a Thursday or a Sunday or whatever - the Shire Calendar is just a realistic expression

that it's not a weekday, and that it shouldn't be regarded as one. And the first day back at work after Christmas is always a Monday.

- The winter and summer festivals are pretty consonant with common practice in many countries anyway. Move Christmas into the yule holiday (Jesus wasn't born in December anyway, so it's no less Biblically correct than current practice). Many countries have a midsummer festival or summer bank

holiday and US independence day can be celebrated then.

- You only need one printed calendar (not the 14 different types we currently need) - you just score off the leap year or not.

- Its easy to fix the locations of other festivals, like Thanksgiving, and then you get a perfectly consistent gap between e.g. Thanksgiving and Christmas

- From a software perspective it's a wash - 2 more mini-months need to be handled, but less bother with differently lengthed months and much easier day-of-the-week calculations.

Adapted from my Slashdot post here

Blog: What men and women want from movies [Thursday 20th October 2011]

What women want from a movie:

- in the beginning the people meet

- in the middle they argue

- in the end they get together

What men want from a movie:

- in the beginning the people meet

- in the middle they get together

- in the end they get eaten by dinosaurs

Unless film makers do something cheesy like a

fake ending (which women are somehow tricked into missing), these two rubrics aren't compatible.

Blog: This human form I now repent [Tuesday 20th September 2011]

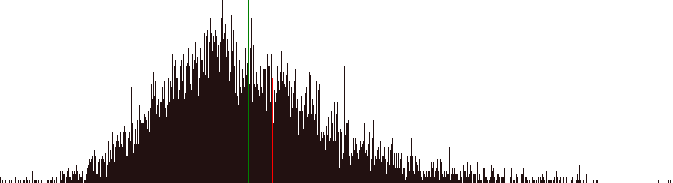

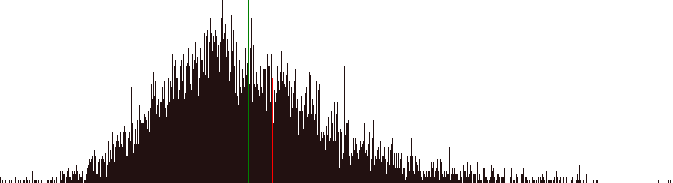

I ran my first half marathon this past Sunday, the

Great Scottish Run in Glasgow.

I've never competitively run further than the 12km Bay to Breakers, but I'd prepared pretty thoroughly. On the day the various suspect joints that I feared might let me down all performed perfectly, but I messed up a bit on the hydration

plan, and an uncharacteristically hot Glasgow left me rather seriously dehydrated by the end (but that's no excuse, particularly for the 5533 people who finished faster than I). I did it in 2 hours 9 minutes, which was about what I estimated beforehand, but I'd have done a deal better had I drunk

properly beforehand.

I'm especially grateful to the kind folks from the Glasgow Sikh community, who set up their own unofficial water station (amid an inexplicable five-mile gap between official water stations) and who had some Kärcher pressure washers to douse the overheated runners.

I've been dehydrated running before, but never this badly, so I was in a pretty feeble condition afterwards. It's unpleasant.

A Google Map showing a GPS trace of the route on Google Maps is here (it's weirdly punctuated in the latter section, I guess due to the water spraying).

The race organisers have placed a CSV of all the half-marathon finishers (with splits) in a machine-parsable file here, so I've had some fun grinding through them (it's not like I'll be walking anywhere today).

Of the 8482 runners who finished (they don't give statistics for non-finishers) 5187 (61%) were men and 3295 women. The male winner was Kenyan runner Joseph Birech in an eye-watering 01:01:26, and the

female winner was fellow Kenyan Flomena Chepchirchir in 01:09:26 (beating, I believe, her PB).

The mean time for the whole field was 02:03:32, with a peak of people finishing around 01:57 (what, did you guys hold hands?). For men only the mean time was 01:57:20, for women only the mean time was 02:13:17

My own time put me 6 minutes slower than the overall mean, and 12 minutes slower than the male mean. A graph showing the distribution of runners' finishing times is below - the overall mean is the green line, I'm the puffy and out-of-breath red one.

In the last 6k I overtook 176 people but 203 people overtook me (so that's a net 27 people who'd taken better care to drink properly). Of these runner #13180, Angus Denham, went from being 5 minutes behind me to finishing nearly 3 minutes ahead. Perhaps Angus is really Batman.

Of the whole race, the most impressive kick (the fastest last section in relation to their first 15k) was by runner #19069, Gary Clelland (splits 00:34:05, 01:20:14, 02:04:03) who seems to have had a horrible middle race, but recovered to do the last 6k in 31 minutes.

Blog: It's time to tune the fire alarms [Tuesday 20th September 2011]

I was up several times last night, and slept very badly, because

one of my smoke alarms was complaining that its battery was dying, and so beeping intermittently. Figuring out

which isn't an easy problem (at 3am nothing is an easy problem) but one that could be

made easier.

BEEP!

There are two alarms in my house. They're mains wired with a 9V battery backup, and when that battery starts to fail (it seems when it gets below about 7.7V) the alarm beeps once, very briefly. The beep is frustratingly irregular, and there's at least several minutes between beeps. There's no

light or other visual indicator on the alarm which is failing. And (as anyone who has heart the siren of an emergency vehicle echoing around the buildings in a densely built up city) it's difficult to spacially locate a high-frequency tone that's echoing off hard surfaces.

BEEP!

The alarms are mounted up high, on the ceiling, they're difficult to remove (push a little tab with a knife while turning the whole thing anticlockwise), and the screwthread is a bit overpainted. There's residual capacitance in an alarm, so even if you remove its battery and disconnect it from

the ceiling supply, it will still muster the energy to beep a few more times. I think I know which alarm it is, so I take it down, open it, remove its battery, find a spare 9V, replace that, restore the detector to the ceiling, and go back to bed. Mission accomplished. All is peace and quiet.

Sleep.

BEEP!

Darn - I must have changed the wrong one. Find another 9V battery. Remove the second alarm (which is much more intransigent than the first), fix it, replace it. Now they're both done. Sleep.

BEEP!

It's 3:30 and this is impossible. Can I have put a bad replacement into one of them - but which? I take them both down, and find the Fluke DVM. How are "normal" people supposed to solve this kind of problem in the early hours of the morning (where "normal" people don't have a stash of 9V

batteries and a digital multimeter) ? I test both "good" batteries - they're showing slightly more than 9V. The two "bad" ones aren't much different - one is 8.3V and the other 8.7V. I don't have any more batteries to try, it's late and I need to work tomorrow, and there's obviously nowhere open

that will sell me two 9V batteries at 4am. I can't think. I disconnect both batteries and leave both alarms on the desk. I pile clothes on the alarms to deaden the sound (knowing they'll beep for a while until their caps have discharged), close all the intervening doors, and go to sleep.

BEEP! BEEP! BEEP! BEEP! all ... night ... long ...

In the morning, when I feel only a little bit more awake, I still can't figure out the problem. There's still a periodic beep, and it can't be either of the alarms. As I stumble around, another BEEP! And then I find it - a third alarm, this time a carbon monoxide detector, hiding in the

cupboard beside the heating system. Its battery is 7.2V, and given the 8.7V one it's happy.

It's stuff like this that makes well-meaning people throw their smoke alarms away, or at least permanently neglect to put batteries in them. I understand why it has to beep, and why it can't flash a permanent light to say it's in trouble (they're trying to maximise the time of protection I get,

even at the expense of my sleep). It's a very simple and cost-optimised device, so it's difficult to think of a sensible way for it to communicate. All it can do is beep.

But do all three detectors have to beep the same? The beep function is a simple piezo electric sounder driven by a simple frequency generator (I guess an XTAL) and gated by a transistor. So:

- At the very least it should be straightforward to substitute a different frequency generator for different detectors in a set. A set of detectors might have one at C, one at G, and one at E♭. A detector bought singly could have a pair DIP switch to set one of four codes.

- If a throwaway novelty greetings card can play a tune, is it really too much cost to have the smoke detector tap out its ID code (maybe two digits, each 1-6 beeps, with a gap between them)? The code can be cooked into the detector, with a little screenprinted decal on the side showing its

code.

- With only a little more cost, make each detector play two notes concurrently with a fixed interval. Right now I'd hope for a nice consonant interval like a major 3rd or a perfect 5th, but I suppose smoke alarms are supposed to sound horrible. So one detector could play a tritone, one a

major seventh, and another a minor second (all with different tonics). Those all sound nasty and urgent, but they also sound different.

Blog: What is a VPN exactly? [Thursday 1st September 2011]

A few days ago, over on Reddit, someone asked "What is a VPN exactly". Wikipedia has a

pretty good, but very technical article on the subject. Instead I

answered with along (and perhaps a tad tortured) analogy. It's reproduced below.

Let me try this analogy out on you (let me know if it makes sense):

- Imagine first that you work for a medium sized company - 300 people in one building. All day long you process paperwork, which you then need to send to other people in the company. Rather than spending all day walking about, you put your intra-office mail into an outbox near your office. A few

times a day a guy with a cart comes round. He delivers mail to you, and takes all the outgoing stuff. The outgoing stuff gets sorted in the mailroom and goes onto the next cart. The address you write on the envelope is a local one; it just says the person's name and their department (not the

company name, or street address, or city, state, or country). That's like a local-area-network.

- You also have to deal with people in other companies. So when you send them stuff, you put on a full public street address, with city and country and postal code. When your mail guy sees that, he puts it in a pile for the public post office. The postman picks it up, and it gurgles through the

public postal system until it gets to the recipient's company. There another postal guy sorts it (with all that company's intra-office mail) and delivers it to the final person. That's like the Internet.

- But now imagine your company expands. They open a new office on the other side of the country, and reorganise so some departments move there, while some stay at the current location. The guy with the mail cart can't walk over there to deliver stuff, so it'll have to go by the public mail. You

could keep a list of which department is where, and if you wanted to mail someone in one of the remote departments you'd treat them as if they were an external person, and write their full address. But that's complicated and inefficient, and lots of people in your building will be sending stuff to

people in the remote building, just like you. So the company says you don't have to change anything. You put the same name and department code you always did. The mail room people know who has moved, and so they build up a pile for stuff going to the remote site. And for efficiency (and often for

security) they bundle these up into one big mailbag, and mail that across the country. It goes on various vans a trucks and planes, sharing them with mail for other companies to other people, and being handled by all kinds of mail workers who're unrelated to your company. At the far end the company

mail people open the bag and treat all the mail inside it just the same as if it was local. So, to both sender and receiver it looks like it's local mail, even though it's gone through the public mail system to get to you. That's like a virtual private network - it appears like the private local

network, but (if you actually follow packages around, how they actually get from A to B) it's over a public mail system.

- That basic VPN, above, is okay, but it has security concerns. If your company mail deals with confidential stuff like sensitive financial documents or secret stuff like stealth fighter components, you'd be worried about all those normal mail workers handling your mail. They could open that big

mailbag and steal the contents, or copy them, or even change them. The people who work at your company have been security checked, but you don't know anything about those regular people in the mail system, and you don't trust them. So your company uses a super-strong kevlar-weave mailbag with a

fancy padlock on it. The mailrooms in both branches of your company have the keys to the padlocks, but the people who work for the public post office don't. So that way, hopefully, your mail won't get read or stolen in transit. With that security done, you can be confident of sending stuff from one

office to the other - it's as safe (if the bag and lock are good) as if you just posted it to someone in another department in the same building. That's an encrypted VPN (really all VPNs are encrypted, so you always have confidence).

- For you working from home, things are much the same, just on a small scale. People post stuff to you as if you were in the office, and the mail people know where to send it, and wrap it in a secure bag.

In all this analogy (I hope it wasn't too tortured) all the mail people, both in your company in in the public postal system, are network routers. The VPN works because your company has told its routers how to do the special work of wrapping up remote intra-company mail in secure wrappers and

readdressing them, and then unwrapping them at the far end. So to partake of a VPN both ends need to have special VPN software, that alters the normal routing scheme to do this. That usually entails your company sites having routers (or servers) that accept requests in the PPTP protocol (that's the

secure bag) and does authentication with CHAP or EAP or the like (that's the padlock). And you need to have similar software on your computer too. With that set up, and with you logged in to the VPN properly, it appears as if your computer is plugged into the network as if you were back at the

office, even though you're in a webcafe in Nepal. Depending on what the VPN is programmed to allow through, you can access network shares or intranet sites, you can even print on a printer back in the office. The VPN hides the complexity and insecurity of the public network (although you probably

notice things are slower).

So yes, a VPN is just what you need. To set one up will need the help of whoever manages your company network. They may choose to configure VPN functionality on existing hardware, or to install additional hardware or software. They'll have to worry about opening a special connection in the

firewall so VPN clients can phone in to establish their sessions, and they'll have to think about how to manage who gets to login and how to authenticate they are who they say they are.

I probably should mave mentioned Peter Deutsch's

8 fallacies of distributed computing, which all apply in this case and which all would apply with a physical mail system (of sufficient complexity) too.

Blog: Monitoring dd progress [Wednesday 20th July 2011]

dd is one of a unix administrator's best friends - it's great for making block-level backups, secure erasure of confidential data, moving a filesystem, or generating test data. But it's pretty common to set

it off on a large job and come back later and have no clue about how much progress it's made. John Newbigin's

dd for Windows has a non-standard

--progress option, but with the standard one it's not so straightforward.

The GNU dd has a nice feature whereby it prints its progress if you send it a SIGUSR1 (e.g. kill -USR1 1234). But if the dd process is wrapped in other processes, or isn't attached to a console, then you can't easily get this information.

Say we're running dd if=/dev/zero of=/dev/sdc

How do we figure out how far it's gone?

To the rescue comes the admin's other best friend, Vic Abell's invaluable lsof

lsof -c dd | grep sdc

reports

dd 2662 root 1w BLK 8,32 0x187e768000 5482 /dev/sdc

The key part of that is 0x187e768000, which is the offset it's reached, in hexadecimal.

To simplify checking that, the following quick and dirty Python script (which takes the filename or device to search for as its only argument) will report the offset field in the lsof output, handily converted to Mb and Gb.

#!/usr/bin/python

import subprocess,sys

if len(sys.argv) !=2:

print 'usage:\n %s <output file or device>' % sys.argv[0]

sys.exit(1)

r = subprocess.check_output('lsof -c dd | grep %s' % sys.argv[1], shell=True)

val = r.split()[6]

if val.startswith('0x'):

base=16

else:

base=10

v2 = int(val,base)

print '%d Mb (%d Gb)' % (v2/1024**2, v2/1024**3)

So if the python script is called dd_progress.py and we run dd_process.py sdc the output will be:

100327 Mb (97 Gb)

Some nice additions: dd can hog the IO bandwidth if given reign, so run it with ionice dd ... so you can still work on the same machine. Rather than continually run dd_progress.py by hand, one can leave it running periodically in another window like this:

watch dd_progress.py sdc

Blog: Games are not realistic (no, really) [Thursday 2nd June 2011]

You really don't

want realistic games, just not stupid ones

If history was like computer games, the Second World War would have ended when Franklin Roosevelt and Winston Churchill sneaked into Hitler's castle (through a ventilation duct) and repeatedly shot a small glowing area of Hitler's neck with their rocket launchers and bakelite laser guns.

If computer games were like history, a game of Starcraft would consist of years of committee meetings about increasing bauxite production and improving the machine tool lubricating oil supply chain, and you'd get an email once a week giving you a statistical breakdown of the friendly and enemy

units destroyed in the conflict.

Blog: Apple and the tiny SIM [Thursday 19th May 2011]

In

this Reuters story, Apple proposes smaller, thinner

SIM cards. But does anyone need a smaller mobile

phone than we already have?

In the short run they're looking at making a phone that's a few mm thinner than the current ones. But in the longer term they're thinking beyond what we currently call a "phone". They're looking at very small form factor devices which keep their data in the cloud, are configured by another

(arbitrary) device which talks to the same cloud, and which make either sporadic or continual data connections with whatever available networks they find, to keep up to date. Imagine very small devices (wristwatches, eyeglasses, earplugs) with 802.11/UMTS/WiMAX radios (which use a mini-sim to

identify themselves to whichever network they encounter). And they're thinking about these things as universal identifiers and payment tokens.

Right now you go running with an iPod. Instead you'll have a iPlug, a pair of little in-ear headphones, but with no cable and nothing strapped to your arm. You set up your music program on a tablet, and it seamlessly syncs. You run further than you'd expected, so the iPlug connects to the

network and downloads more music. Miles from home your knee gives out. You touch the iPlug and say "taxi". A taxi comes (sent by Apple to the location the iPlug knew; Apple gets a dollar from the taxi fare, which you pay using the iPlug).

You have a iSim unit in your iWatch. You're thirsty, so you touch the watch and say "coffee shop". The watch face shows an arrow to a nearby one, and the distance, and walks you there. Apple gets a dollar. You buy a drink with the iSIM as a payment token (Apple gets 30 cents) and sit down at a

table. The table's surface is an active display; it talks to your iWatch and opens a connection to your account in the iCloud. Your personal news appears, your emails, your documents. You do some work, browse some stuff, and when you're done you stand up and the table blinks off. Things will be as

you left them when you next peer with an active display - at home, in the car, on the train, at the office, on the beach.

All of this stuff has been done, in various disconnected ways, already. You can pay for stuff with your phone, in some places. Most Europeans (well, Brits at least) have smart cards in their credit cards. You could hotdesk 10 years ago with a SunRay (kinda). You can unlock doors with a Dallas iButton token. Having super-cheap super-light totally ubiquitous networking makes the whole thing join up into a compelling, powerful, system.

You'll never be alone again.

Adapted from my Slashdot posting here. Several posters astutely point out that tiny devices have tiny batteries and so short lives. For a device that's mostly connected to local (low-power) connections, that isn't

configured to receive calls (which means it doesn't turn the radio on every few seconds to check for messages or calls) and which you habitually dock to recharge every day, this isn't an unreasonable ask.

Blog: Guitar effects on Ubuntu [Wednesday 18th May 2011]

Rakarrack is a brilliant guitar effects processing system for Linux, which provides an impressive range of effects including chorus, flanger, distortion, echo and a bunch of stuff I don't begin to understand.

To use it you need to plug your guitar into your Linux PC. It's possible to do this with a direct connection but the signal will be weak and noisy. It's much better to use a proper boosted instrument connection - there are

many of these, but this posting talks about setting things up with the inexpensive Behringer UGC102 USB device. Various online merchants sell this for around £30.

Once you've got that, the following guide lets you get Rakarrack working in Linux. I've tested these instructions in Ubuntu 11.04 (and it mostly works the same in earlier Ubuntu releases); hopefully things should be much the same in a similar modern Linux like Fedora.

- In Ubuntu Software Centre (or Synaptic, or with

apt-get) install the package rakarrack (which will entail you enabling the "universe" repository, if you haven't done so already). By installing rakarrack you also bring in its dependencies, including JACK audio system and its associated utilities. - Save the following script (say to

rak.sh) and make sure that script is executable.

#!/bin/bash

# The following assumes that the Behringer UGC102 is ALSA device #1

# if it isn't, run aplay -l to see what it is

jackd -R -dalsa -D -Chw:1,0 -n3 &

sleep 1

rakarrack &

sleep 1

# mono Behringer inputs to rakarrack stereo input

jack_connect system:capture_1 rakarrack:in_1

jack_connect system:capture_1 rakarrack:in_2

# rakarrack stereo output to both main outputs

jack_connect rakarrack:out_1 system:playback_1

jack_connect rakarrack:out_2 system:playback_2

# wait for rakarrack to be closed

wait %2

# kill JACKd to tidy up

killall jackd

- Plug the Behringer device into a USB port and a guitar into it.

- In

Terminal run aplay -l which will list the audio devices that ALSA can see. A typical report will look something like this:

**** List of PLAYBACK Hardware Devices ****

card 0: Intel [HDA Intel], device 0: ALC883 Analog [ALC883 Analog]

Subdevices: 1/1

Subdevice #0: subdevice #0

card 0: Intel [HDA Intel], device 1: ALC883 Digital [ALC883 Digital]

Subdevices: 1/1

Subdevice #0: subdevice #0

card 1: default [USB Audio CODEC ], device 0: USB Audio [USB Audio]

Subdevices: 1/1

Subdevice #0: subdevice #0 <

In this case "card 1" is the Behringer device (it may vary on your system).

- If the device is reported as being different from "card 1", change the

1 in hw:1,0 in the script to the appropriate number. - Run the script.

In some configurations, PulseAudio has exclusive ownership of the alsa device, and so jackd can't run. In that case, prepend pasuspender -- to the start of the jackd line in the script. - Rakarrack will appear. You will need to turn on the effect rack (the top-left button, labelled FX On) and generally you need to raise the input level and maybe the output level.

Update: I've make a few demo sounds (you'll have to excuse my terrible playing) giving some idea of Rakarrack's capabilities. They're just named after the default Rakarrack setups that I used:

Summer at the beach

Metal amp

Funk wah

Blog: Pluto, we hardly knew ye [Wednesday 11st May 2011]

On Wikipedia's Science Reference Desk, Dolphin51

points out a beautiful, perhaps sad little fact about Pluto, the little planet that couldn't...

Pluto was discovered in January 1930 - 81 years ago. Pluto takes 248 Earth years to make one orbit of the sun. So in all the time that we have known about Pluto, conferred ''planet status'' upon it, then

removed that status, it has covered only 117 degrees of arc around the sun, one-third of an orbit, or one-third of a Pluto year.

Blog: On credit card data theft [Monday 9th May 2011]

The recent theft of customer data from the

Sony Playstation Network computer system shows the continuing problem faced by anyone who has lodged their credit card information at an online merchant and allowed

automated repeat billing. It's quite reasonable for someone to have a dozen or so such ongoing relationships, and for their credit card details to be stored in the systems of as many retailers again (particularly those that check the "keep my details" box by default).

Merchants that make repeat payments on the same credit card have to keep the customer's credit card info around. This makes breaking into them a tantalising prospect for any attacker, as the merchant stores enough information to allow fresh payments to be made at any merchant, or for fake

physical cards to be manufactured. This posting discusses two proposals that allow merchants to make automated repeat payment requests on the same credit card, but without the merchant's permanent database storing that crucially re-usable credit card information.

In the examples below, the card data (CD) is a record containing the cardholder's name and the card's number, cvv, and expiry date.

1. The public-key cryptography method

- The merchant receives CD from customer

- The merchant builds a merchant+CD packet by concatenating their merchant ID (mID) with the data in the CD:

mCD=mID+name+expiry+number+cvv - The merchant encrypts the mCD with the acquirer's public key. EMCD = Eacquirer-pub(mCD)

- The merchant then forgets the name, number, and cvv fields. They keep the expiry around so they can prompt the customer to renew their card details when the card is close to expiring.

- The merchant builds their ordinary payment-approval request to the acquirer by concatenating the EMCD with details specific to the transaction (chiefly date and amount) and sends this to the acquirer over the normal encrypted channel.

- If the acquirer approves the transaction, the merchant retains the EMCD in their database along with the expiry date. For future transactions they build the payment-approval request as above, without rebuilding the EMCD.

B. The payment-token method

- The merchant receives CD from customer

- The merchant builds the normal approval transaction and sends this to the acquirer as normal.

- The acquirer approves the transaction, and as part of the approval returns a repeat-payment-token (RPT), a lengthy random number.

- The merchant forgets all the CD except the expiry, and stores the expiry and RPT in their database.

- For subsequent transactions, the merchant builds a repeat-payment-request transaction, and instead of the CD it uses the RPT. On receipt of this, the acquirer checks that the corresponding account is valid (in the normal way) and verifies that the submitting merchant is the same as created the

RPT. If the mID mismatches, the payment is declined.

In both schemes the merchant does not retain a permanent record of the customer's credit card data (bar its expiry). So if the merchant's system is compromised, and the attacker gains access to the list of customers, including all the data the company stores on them:

- these cannot be turned back into bare credit card info, as the merchant, and the attacker, don't know the acquirer's private key. So the attacker cannot generate fresh payment transactions with another merchant or manufacture a valid physical card.

- armed with the EMCD/RTP the attacker can create further transactions with this merchant, but as the merchant's stored info is specific to that merchant, it can't me used to generate transactions at another merchant.

The public-key method requires the acquirer and merchant maintain a public-key infrastructure, including key distribution, that they would not otherwise. The payment-token method does not, but requires the acquirer to store payment state for each (merchant,customer) pair.

Neither method protects against the same merchant being fooled into producing unauthorised transations, but it firewalls the damage caused by a single merchant's system being compromised to that merchant.

2010

Blog: Left Below [Thursday 16th September 2010]

The end of the world is nigh. From

The Road to

Fallout to

World War Z and

Zombieland, we're being trained to live in the world after The Catastrophe, when we have been (as the Simpsons would have it)

Left Below.

Once thing is clear: if you're one of the few survivors, nothing more useless than an electric car. At least in the short to medium term, you're going to need a good old fashioned petrol car. You can siphon fuel from other vehicles and from the tanks of petrol stations. If this is a real

apocalypse (which means almost everyone is dead) rather than a mere zombie mishap, there is essentially enough fuel for you for the rest of your life.

In the longer term (maybe a generation or two, or much less if there are more survivors) then probably a diesel car adapted to burn biodiesel is the way to go, providing you can get the feedstock for that.

But plugging your Nissan Leaf into a windmill? Only if you live beside a windmill, and don't plan on being 40 miles from it ever again.

Blog: Left 4 Dead 2 pricing and the future [Wednesday 7th April 2010]

Valve has lowered the

Steam pricing of their zombie holocaust shooter

Left4Dead2, bringing its UK price to £20. As with much of Valve's perpetual twiddling with online pricing models, it's at once clear that they're up

to something, but not at all clear what that might be.

Left4Dead2's expansion "The Passing" is due for release fairly soon; this pack will, at least for PC users, be free. So they're doing their usual thing, adding value to an existing property and stretching that long tail out

further.

Valve's physical distribution is handled for them by Electronic Arts, who (along with the physical retailer) will take a sizeable chunk of the proceeds, and who (if they have any sense) will have some deal with Valve preventing them from undercutting the retail channel with their own online

service, at least for a while.

Valve has been very experimental about pricing and packaging, and they've done an excellent job of pushing Steam out to a pretty wide footprint, and teasing us with those darn £5 game deals. While it's always nice to make £5 on some old game, when you'd otherwise not have sold anything, it's

much nicer to take all off a full-price release, and not have to share any with a distributor, a retailer, or have to spend money on little disks and manuals and other junk. So I think it's only a matter of time before Valve try their big experiment - can they make more money selling a title

only on Steam, with no physical channel at all? It's clearly where the market is going, they're in a better position than anyone to deliver, and they have two prospective titles that have people champing for the (Portal 2 and the ever elusive Half-life 2 episode 3). Both have

an established userbase that's eager for more, and all those people already have Steam. And how many people in either product's market are stuck on no-internet-ever or dialup-only machines; Valve (who see the results of Steam's occasional hardware surveys, which ask just this) know, and you can bet

it's a small and diminishing group. The question they have to ask, when embarking on an online-only distribution, isn't "can we sell more online", or "can we take more turnover online", but simply "can we make more money selling online - does the less profitable physical distribution just undercut

sales we'd get online anyway?" Providing both games are good (and again Valve have an excellent track record) they don't have too much to lose - sell online for a month and if that doesn't pan out, cut a retail deal anyway.

Sooner or later they're going to take a punt at this, unless EA or whomever chucks a wedge of money at them to shore up a leaky business model. So that's my prediction - HL2EP3 sold in one market (let's say the US) online only at introduction, with a physical release later or not at all. It's

Valve's crunch time - do you want to work for EA, or be EA?

Blog: On vacuuming [Wednesday 10th February 2010]

I just did the vacuuming (what we get to call hoovering, but maybe not

Hoovering, in the UK). It's a boring business, and one that seems like an anachronism. Why is it like this?

2009

Blog: Dead Borders walking [Monday 30th November 2009]

Borders (UK) is going out of business. On visiting a branch today, in a vain attempt to pick the corpose for any kind of worthwhile bargain, I left empty handed, surprised they stayed in business as long as they have.

I have a house full of books, and a storage locker somewhere with many more, some of which I've actually read. I love books, which means I should love book shops too. But I don't love Borders, and I won't mourn its passing.

My "local" Borders is in a hideous retail carpark with a collection of other dead-eyed boxes, where nothing ever happens and there's no reason to visit unless you really want something or truly have nothing to do. It's half an hour drive for me, and half an hour for everyone else.

The shelves tell a sorry story The "new age" section is three times the size of the "science" section (and "science" includes the lightest of popular science, all of maths and engineering, and anything DIY that looked harder than putting up shelves). "Self help" is twice the size, as is

"religion" - and "religion" is barely about religion at all, but mostly self-help books that cravenly invoke religion to sell themselves ("Jesus wants to you be thin"). When there's more books about the Christian way to stop smoking than there are bibles, and no sign of Aquinas or Boethius or

Luther or St.Teresa, it's another sign that they're beyond redemption (so to speak).

Eight quid for a 16 page kids book? A huge collection of 2009 calendars with newspaper cartoons about slippers? Discount books that don't compete with Bargain Books who pay a tenth what Borders do for retail space? Old DVDs with prices that seem designed to compete with Virgin Megastores (look

how well they did) - £9 for Casablanca? £11 for Fast Times at Ridgemont High? £12 for some thing with Jack Black in it?

Front of house, their pride and joy, Borders' strategic reserve of unsold crap. Ghostwritten celebrity junk: all called something like "My Life" or "My Way", supposedly written by someone whose life has barely started and whose only achievement is being on some program on Channel Five where they

were the outstandingly stupid person among a group of troglodytes carefully chosen by professional psychologists as being the worst possible. TV tie-ins. Celebrity recipies. Celebrity detox. Celebrity diets. Celebrity confessions. Contrite celebrity "when I got out of jail all the money was gone"

sob stories. Things one guy from Top Gear likes, and things the other guy from Top Gear hates. I am Jack's raging bile duct. And I swear the prices for this junk have gone up.

People will tell you that Borders, who happily crushed the smaller bookseller when times were good, has in turn been crushed between Tesco and Amazon. This is true, but Borders went along with it. It's no surprise that Tesco can shovel junk better and cheaper than anyone, or that prime retail

space isn't best used for selling off old calendars and discount books. With its convenience and range and prices, Amazon is darn hard to compete with, but Borders didn't innovate and really didn't look like they were trying. Relying on the "Grandma doesn't buy books off the internet" theory was a

loser for them, because Grandma turned out to be smarter than they thought.

Still, the venture capitalists who own them will have plenty of "Chicken Soup" books to read inbetween insolvency meetings.

2008

Blog: The Sherlock Holmes inversion [Sunday 28th December 2008]

Sherlock Holmes stories are told from the perspective of Dr John Watson. Sherlock is a genius, a strange mercurial creature that is somehow above the rest of us. But if the stories were told from Sherlock's perspective, they'd be

Idiocracy.

2005

Blog: Buildscripts and stuff [Friday 14th October 2005]

Ah, there's nothing like making life difficult for yourself. This site, you see, is maintained by a script of my own creation. For a very long time it's been a cygwin bash file, which at its heart is a massive find statement. This finds a bunch of html-like source files and

runs them through an html preprocessor. I use

iMatrix' HTMLPP - I can't say I particularly recommend it (it seems there's no ongoing development on it, it's a bit crufty and requires a bunch more stuff in the templated document than I'd really wish.

Ideally I'd move to a modern, supported templating system (people have recommended Clearsilver to me, and it looks nice), but the effort of changing all my sources files (of which there are around forty, plus some files that are built automatically) would be a bunch of work. The bash script which

drives this thing grew and grew, doing automatic image thumbnailing, having support to host source code files (importing them from a nearby live sourcetree and formatting them nicely), and having some

basic blogging support.

Imagemagick handled the image scaling.

A while ago I started rewriting in python, still on cygwin. I really don't know why. It was worth it, I suppose, but more complicated than I'd hoped. In a final push yesterday and today I finally finished it, and cut its dependency on external utilities that cygwin provides (sed and find and

stuff). So now it runs natively on Windows or Linux, which is a relief. Cygwin is fine as long as you stay inside the little cygwin box - but if you have to call in and out of it then you run into endless little filename-escaping issues.

The python version is certainly more verbose than its bash counterpart, but much easier to read (and, I hope, to maintain in future). Image scaling is now done by PIL rather than imagemagick (I just couldn't get PIL to work properly on

cygwin's python). Where I used hacky sed calls to do some text substitutions I now do some really botched python regexps instead (I'm more ashamed of them that I was of the sed ones, which is saying quite a lot). The whole thing has about as many lines of code (although far fewer

backslashes) and takes about the same time to run. But for exactly one line (a shell variable expansion in code called inside the macro system) it's entirely portable (damn, I'd forgotten that hideous DOS %var% syntax). I still use htmlpp to do template expansion, html tidy to fix any html snafus,

and linklint to verify that there aren't any bad links. Blogs are still built in an overly manual way, and there's still no RSS syndication.

I keep wondering whether to shift the blogging support to Wordpress (which I've goofed around with, and which couldn't be easier). I guess I'd put the Wordpress content into an iframe (where the current static blog appears now) as I don't really want the entire content served from Wordpress. I'm

squeamish about dynamically generated content when static would do, so I guess I'll have to figure out how to get it to produce static content. Urgh, I guess that's another hundred lines into the buildscript.

Blog: Backgrounds and their fans [Friday 19th August 2005]

Our morning glories are out today (and will be gone tomorrow), so I took some pretty nice photos of them. I've added the best (the plainest) as a new

background.

In checking through my webserver logs I've discovered that several teenager use my images for the backgrounds to their weblogs. Technically it would be better if they downloaded the images to their own website (instead of using up my paid-for bandwidth), but they seem like sweet people, so I'm

not going to hassle them about it. If I was particularly nasty I could write some code that checks the referrer on requests for those images, and send visitors to their site (but not mine) something horrible like the goatse man.

It's a compliment, I guess, so it'd be churlish to complain. The thing about copyright is that if I fail to defend it, someone might claim that I've abrogated my right to do so in future. The last thing I'm going to do is send a nasty cease-and-desist letter to these folks, so here's the smart

solution - the following myspace users are hereby granted licence to use images from this website on their personal weblogs:

(boy, you guys have some really overproduced websites)

Blog: Flowers [Sunday 7th August 2005]

I've been looking after my neighbour's greenhouse and the plants on his deck while he's on holiday. The weather has been lovely, which makes for much watering to be done. Although the neighbours are missing the display, I'm enjoying the flowers.

Here's some photos:

Blog: Improving how MediaWiki handles anons (users who haven't created an account) [Monday 4th April 2005]

MediaWiki, the open-source software which runs Wikipedia and many other wiki websites, allows users to edit without creating an account. This exposes these new editors' IP addresses, and makes it impossible to later associate the initial work they do with an account they

subsequently create. This proposal suggests a cookie-based scheme which aims to ameliorate some of this.

Principles for a new version

- The barrier to entry should be no higher than now

- The degree of privacy afforded to all users should be no less than now